If you’ve been exploring artificial intelligence or technology news recently, you might have come across GPT. Many people ask what GPT stands for and how it works. GPT is at the center of modern AI, powering chatbots, content generation, and other smart applications.

This guide explains the full meaning of GPT, its history, the technology behind it, its different versions, and its real-world applications, and it answers frequently asked questions. By the end, you will understand why GPT is revolutionizing AI and digital communication.

What Does GPT Stand For?

GPT stands for Generative Pre-trained Transformer. Let’s break it down:

- Generative: GPT can create or generate content, from text and code to answers in natural language.

- Pre-trained: The model is trained on massive amounts of data before fine-tuning for specific tasks.

- Transformer: A type of neural network architecture that processes language efficiently by using attention mechanisms to weigh how strongly each word relates to the others.

In simple terms, GPT is an AI model capable of understanding, generating, and predicting human-like text.
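To make "attention mechanisms" a little more concrete, here is a minimal sketch of scaled dot-product attention, the core operation inside a transformer. It is an illustration only: the three token vectors are made-up values, and the sketch leaves out the learned projections, multiple heads, and masking that real GPT models use.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this query against every key, scaled by sqrt(dimension).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, values))
                 for i in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Three made-up 2-dimensional token vectors (illustrative values only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)

for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how much one token "attends" to every token in the sequence. Tokens whose vectors point in similar directions receive larger weights.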

History and Development of GPT

GPT was developed by OpenAI, and it has evolved through multiple versions:

- GPT-1 (2018): Introduced generative pre-training on a transformer-based architecture for text generation.

- GPT-2 (2019): Larger model with 1.5 billion parameters, capable of generating coherent paragraphs.

- GPT-3 (2020): Revolutionary model with 175 billion parameters, powering chatbots, virtual assistants, and creative writing tools.

- GPT-4 (2023): Multimodal AI capable of understanding text and images, delivering more nuanced outputs.

- GPT-5 (2025): Advanced reasoning, adaptive responses, and broader context understanding.

Each iteration improved accuracy, contextual understanding, and versatility.

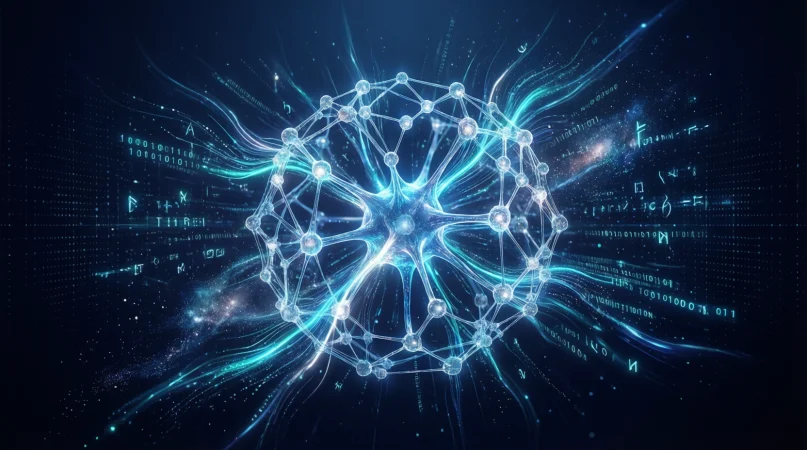

How GPT Works

GPT uses a transformer architecture with attention mechanisms, which lets it model the relationships between words in a sentence or paragraph. Key features include:

- Context Understanding: It reads the surrounding text to generate accurate responses.

- Pattern Recognition: Learns patterns from massive datasets for coherent output.

- Text Prediction: Predicts the next token (a word or a piece of a word) based on the preceding context, then repeats that step to build sentences and paragraphs.

- Fine-Tuning: Can be customized for specific applications like chatbots or coding assistants.

In essence, GPT can simulate human-like conversations while producing meaningful content at scale.
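The prediction loop described above can be sketched with a deliberately tiny stand-in. The example below is not a transformer: it is a toy bigram model that only counts which word follows which, and the corpus and function names are invented for illustration. What it shares with GPT is the overall loop: predict the next word from context, append it, and repeat.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count which word follows which in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = follows.get(word)
    if not counts:
        return None
    return counts.most_common(1)[0][0]

def generate(follows, start, max_words=5):
    """Repeatedly append the predicted next word (greedy decoding)."""
    output = [start]
    for _ in range(max_words):
        nxt = predict_next(follows, output[-1])
        if nxt is None:
            break  # no known continuation for this word
        output.append(nxt)
    return " ".join(output)

# Tiny made-up corpus, for illustration only.
corpus = "the cat sat on the mat and the cat sat on the sofa"
model = train_bigrams(corpus)
sentence = generate(model, "the", max_words=4)
print(sentence)
```

With this corpus the call produces `the cat sat on the`. A real GPT model replaces the word counts with a neural network that looks at the whole preceding context, and it usually samples from a probability distribution rather than always taking the top choice.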

Applications of GPT

GPT has a wide range of applications across industries:

- Chatbots and Virtual Assistants: Customer support, personal AI companions.

- Content Creation: Blog posts, articles, social media captions, email drafts.

- Education: Tutoring, answering questions, language translation.

- Coding Assistance: Auto-generating code, debugging, software development support.

- Healthcare: Patient triage, medical documentation, summarization.

- Creative Writing: Stories, poetry, scripts, and creative ideas.

GPT is revolutionizing how we interact with machines and automate tasks that require human-like intelligence.

Types of GPT Models

There are several versions and adaptations of GPT:

- Standard GPT: Base model trained on text data.

- ChatGPT: Optimized for conversations, question-answering, and dialogue.

- Codex: A GPT model specially trained for coding and programming tasks.

- Multimodal GPT: Can work with images as well as text.

These specialized models allow GPT to serve multiple industries efficiently.

Advantages of GPT

- Produces human-like text

- Handles complex queries with contextual understanding

- Supports multiple languages

- Enhances productivity and creativity

- Scalable across industries and applications

Limitations of GPT

- Can reproduce biases present in its training data if not monitored

- Lacks true human understanding or emotions

- Requires large computational resources

- May produce plausible-sounding but factually wrong content
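To put the "large computational resources" point in perspective, here is a rough back-of-the-envelope estimate of the memory needed just to hold a model's weights. The parameter counts are the ones quoted earlier in this guide. The 2 bytes per parameter is an assumption (16-bit precision), and the estimate ignores everything else a running model needs, so treat the results as a lower bound rather than a hardware requirement.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter=2):
    """Approximate memory to store the weights, in gigabytes (10**9 bytes).

    bytes_per_parameter=2 assumes 16-bit weights; this is an assumption,
    not a published specification.
    """
    return num_parameters * bytes_per_parameter / 1e9

# Parameter counts as quoted in this guide.
models = {"GPT-2": 1.5e9, "GPT-3": 175e9}
estimates = {name: weight_memory_gb(count) for name, count in models.items()}

for name, gigabytes in estimates.items():
    print(f"{name}: about {gigabytes:.0f} GB for weights alone")
```

Under these assumptions GPT-2 needs about 3 GB and GPT-3 about 350 GB, which is why the largest models run on clusters of specialized hardware rather than on a single consumer machine.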

Despite these limitations, GPT models keep improving through fine-tuning, reinforcement learning, and regular updates.

FAQs

Q1: What does GPT stand for?

GPT stands for Generative Pre-trained Transformer, an AI model for generating human-like text.

Q2: Who developed GPT?

GPT was developed by OpenAI, a leading AI research organization.

Q3: What is the difference between GPT-3 and GPT-4?

GPT-4 is the more advanced model: it is multimodal, accepting images as well as text, and it handles context better than GPT-3.

Q4: Can GPT understand images?

Yes. GPT-4 and GPT-5 are multimodal and can analyze both text and images.

Q5: Is GPT used in coding?

Yes. Codex, a GPT model trained on code, specializes in generating and debugging code.

Q6: Is GPT free to use?

Some products, such as ChatGPT, have free tiers, while more advanced models require a subscription or paid API access.

Conclusion

Now you know exactly what GPT stands for and why it’s a cornerstone of modern AI. From chatbots to content creation, GPT is transforming how humans interact with machines. While it has limitations, its applications are expanding across industries, making it a vital tool in the digital era.

Understanding GPT empowers you to leverage AI for productivity, creativity, and problem-solving in 2026 and beyond.